
Imagine you’re planning a huge party to celebrate a milestone event. You’ve sent invitations far and wide, booked an amazing venue, and hired a fantastic catering company. As the big day approaches, you start getting excited thinking about all your friends and family gathered together for an epic celebration.

But then the fateful night arrives. The first guests start trickling in, but soon the trickle turns into a flood. Before you know it, hundreds more people than you expected show up! The venue is overcrowded, the food runs out embarrassingly quickly, and the whole event turns into a disastrous nightmare.

This party scenario is a lighthearted analogy for the critical importance of performance testing in software and web applications. Just like planning for the right number of guests at a party, developers need to ensure their apps can handle the expected user load and traffic demands. This is the core purpose of performance testing.

At its essence, performance testing evaluates how a software system behaves and holds up under various conditions and constraints. By simulating real user traffic before actually deploying changes, teams can identify potential bottlenecks, fix issues proactively, and confirm the application performs well under the loads it is likely to face.

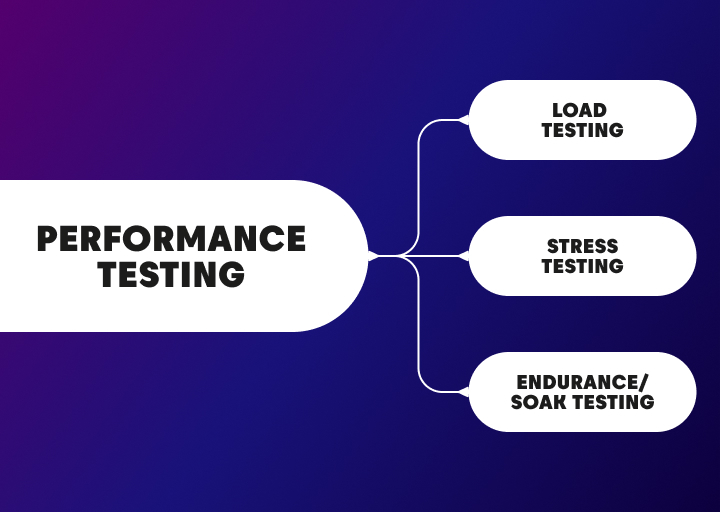

Table of Contents:

1. Load Testing

2. Stress Testing

3. Endurance/Soak Testing

4. Spike Testing

5. Scalability Testing

6. Performance Testing Tools & Strategies

6.1 Tools of the Trade

6.2 Incorporating Testing into Your Workflow

6.3 The Age of Performance Engineering

There are several key types of performance tests, each providing unique insights:

Load Testing measures how an application handles expected user traffic volumes and concurrency, validating its capacity against anticipated demand.

Stress Testing pushes an app beyond normal conditions to find its breaking point. This exposes upper limits and components that may require fortification.

Endurance/Soak Testing examines how performance holds up over an extended duration under high loads, and is often used to detect memory leaks or gradual degradation.

Spike Testing evaluates how an app copes with sudden, dramatic bursts of traffic.

Scalability Testing measures how performance changes as you add resources such as servers, containers, or database replicas.

By incorporating these testing practices, businesses minimize the risk of crashing websites, failed deployments, frustrated users, and other disastrous scenarios – the software equivalent of your party being ruined by too many unexpected guests!

While performance testing has always been critical, the imperative is even greater in today’s landscape of cloud-native, distributed applications. Modern users have higher expectations than ever of fast, seamless digital experiences. Industry research has reported that even a 100 ms delay can hurt conversion rates.

Whether developing media streaming apps, e-commerce checkouts, business analytics dashboards or other heavy workloads, comprehensive performance testing is a must. Done right, it can reveal critical insights that ensure scalability and reliability as user demands evolve.

In the following sections, we’ll dive deeper into load testing, stress testing, and other key methodologies. We’ll also cover testing strategies, tooling, and emerging best practices – giving you a comprehensive guide to implementing robust performance testing for your mission-critical applications.

Load Testing

Okay, let’s get into the nitty-gritty of load testing – one of the most critical and commonly used performance testing techniques. I’m sure you’ve been in situations where a website or app is just crawling and you want to pull your hair out in frustration. Well, load testing helps developers avoid putting users through those maddening experiences.

The fundamental purpose of load testing is to see how your application holds up under the expected real-world usage and traffic levels it will face. It simulates having hundreds, thousands, or even millions of concurrent users pounding away at your software to verify it can handle that kind of load without crashing or becoming unacceptably slow.

So how does the load testing process actually work? First, you need to define the user scenarios and workflows you want to test. For an e-commerce app, that may be scenarios like users browsing products, adding items to their carts, and completing the checkout flow. Once you have those core user journeys mapped out, you use load testing tools to generate virtual users that mimic those actions.
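To make the virtual-user idea concrete, here's a minimal sketch in Python using only the standard library. It assumes a hypothetical `send_request` function standing in for real HTTP calls, and the journey step names are illustrative. Real load testing tools do this (and much more) for you, so treat this as a picture of the mechanics rather than a tool to run against production.

```python
import random
import time
from concurrent.futures import ThreadPoolExecutor

# Illustrative e-commerce user journey; step names are hypothetical.
JOURNEY = ["browse_products", "add_to_cart", "checkout"]


def send_request(step: str) -> bool:
    """Hypothetical stand-in for a real HTTP call: sleeps 1-5 ms to mimic
    server latency and always reports success."""
    time.sleep(random.uniform(0.001, 0.005))
    return True


def virtual_user(user_id: int) -> list[dict]:
    """Walk one virtual user through the journey, timing every step."""
    samples = []
    for step in JOURNEY:
        start = time.perf_counter()
        ok = send_request(step)
        elapsed_ms = (time.perf_counter() - start) * 1000
        samples.append({"user": user_id, "step": step, "ms": elapsed_ms, "ok": ok})
    return samples


def run_load_test(concurrent_users: int) -> list[dict]:
    """Run all virtual users in parallel threads and flatten their samples."""
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        per_user = list(pool.map(virtual_user, range(concurrent_users)))
    return [sample for user_samples in per_user for sample in user_samples]


if __name__ == "__main__":
    results = run_load_test(concurrent_users=20)
    print(f"collected {len(results)} samples from 20 virtual users")
```

Threads keep the sketch simple, but they get expensive at thousands of users, which is one reason dedicated load testing tools rely on more efficient concurrency models.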

Now here’s the really cool part – load testing software can simulate those virtual users hitting your app from all around the world to test global performance. For example, you might spin up 5,000 concurrent users in North America, 10,000 in Europe, 15,000 in Asia, and so on. As they pound away at your application, monitoring tools capture detailed data on response times, throughput, error rates, network performance, and more across every geographic region.

With those metrics, developers and performance testers can then analyze the results to identify problems and areas that need improving. Maybe the product browse functionality is lightning fast, but the checkout flow is sluggish for users in certain regions. Or perhaps the application starts crapping out above 50,000 concurrent users. Having this data allows you to optimize your code, infrastructure, CDNs and so on before releasing updates to production.
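Here's one way that analysis could look, as a rough sketch. It assumes each recorded sample is a dict with hypothetical `region`, `step`, `ms`, and `ok` keys, and it flags any region/step pair whose 95th-percentile latency exceeds a budget you choose. The budget value is yours to pick; nothing here prescribes one.

```python
from collections import defaultdict
from statistics import quantiles


def percentile(values: list[float], pct: int) -> float:
    """pct-th percentile (1-99), interpolating linearly between samples."""
    if len(values) == 1:
        return values[0]
    return quantiles(values, n=100, method="inclusive")[pct - 1]


def summarize(samples: list[dict], duration_s: float) -> dict:
    """Group samples by (region, step) and compute latency, error, and throughput stats."""
    groups: dict[tuple, list[dict]] = defaultdict(list)
    for s in samples:
        groups[(s["region"], s["step"])].append(s)
    report = {}
    for key, group in groups.items():
        latencies = [s["ms"] for s in group]
        report[key] = {
            "p50_ms": percentile(latencies, 50),
            "p95_ms": percentile(latencies, 95),
            "error_rate": sum(not s["ok"] for s in group) / len(group),
            "throughput_rps": len(group) / duration_s,
        }
    return report


def slow_spots(report: dict, p95_budget_ms: float) -> list[tuple]:
    """Return the (region, step) pairs whose p95 latency exceeds the budget."""
    return sorted(key for key, stats in report.items() if stats["p95_ms"] > p95_budget_ms)
```

Percentiles matter more than averages here: a checkout flow with a fine average can still leave one user in twenty waiting far too long.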

The best practice is to integrate load testing into your continuous integration and deployment workflows. That way, you aren’t just scrambling to do it before a big launch or sale event. The performance of your application is constantly being validated through each iteration and release so there are no nasty surprises when it hits the real world.

I can’t overstate how crucial load testing is, especially in today’s world of global mobile apps, IoT devices, large enterprise workloads, and more. Clayton Lengel-Zindel, principal consultant at OddBull, sums it up well: “Load testing is mandatory for any app operating at scale. You have to know your limits and breaking points to fortify your systems and meet user expectations. It’s widespread DevOps malpractice not to do it regularly.”

There you have it – load testing gives you the performance toughness and confidence to release your apps without fear of being crushed under too many users. In the next section, we’ll look at the similar but distinct practice of stress testing. So get ready to take things to the limits!

Stress Testing

Alright, now that we’ve covered load testing to validate your app’s performance under normal conditions, let’s turn it up a notch. Time to talk about stress testing!

Have you ever seen those videos where they take a brand new smartphone and just go to town on it? They bend it, scratch it, dunk it in water, run it over with a truck – you know, all the totally normal stuff you’d never actually do to your precious phone. Well, stress testing software is basically the digital equivalent of that abuse.

The idea behind stress testing is to push your application way beyond its normal operating limits to see how far you can go before it craps out completely. While load testing checks performance under anticipated load, stress testing is about finding the breaking point and maxing out the system’s capacity.

Now, I can already hear some of you saying “But why would I ever want to break my application?!” Fair question. The point isn’t actually to send your lovingly crafted code into total meltdown. It’s to identify those breaking points in a controlled testing environment so you can fortify the system proactively before releasing it into the wild.

By subjecting your software to extreme levels of traffic, data volume, or network requests, stress tests expose upper limits and potential weaknesses you may not have known existed. It’s like doing extremely hardcore training to reach peak physical condition and endurance.

Let me give you a simple example. Let’s say your load tests show that your e-commerce site can handle 50,000 concurrent users before performance starts to degrade. In a stress test, you might simulate 500,000 or even 1 million simultaneous users. As that massive spike of traffic hits your application, your monitoring tools will show you which components fail first. Is it the database? The web servers? The caching system? Those insights let you focus engineering efforts on fortifying the weakest links.
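The "keep pushing until something gives" loop can be sketched as a step ramp. This is a simplified illustration. In a real stress test, `measure_error_rate` would run your load tool at the given user count and read the error rate back from monitoring. Here `fake_system` is a hypothetical stand-in that starts failing above 50,000 users, and the 1% error threshold is an assumed definition of "broken."

```python
from typing import Callable, Optional


def find_breaking_point(
    measure_error_rate: Callable[[int], float],
    start_users: int,
    step_users: int,
    max_users: int,
    max_error_rate: float = 0.01,
) -> Optional[int]:
    """Raise load in fixed steps; return the first user count whose error
    rate exceeds max_error_rate, or None if the system never breaks."""
    users = start_users
    while users <= max_users:
        if measure_error_rate(users) > max_error_rate:
            return users
        users += step_users
    return None


def fake_system(users: int) -> float:
    """Hypothetical system model: no errors up to 50,000 users, then the
    error rate grows with the excess load (capped at 100%)."""
    capacity = 50_000
    if users <= capacity:
        return 0.0
    return min(1.0, (users - capacity) / capacity)


if __name__ == "__main__":
    print(find_breaking_point(fake_system, 10_000, 10_000, 1_000_000))
```

Finding the load level is only half the job. The other half is checking your monitoring at that level to see which component (database, web tier, cache) gave out first.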

This stress testing approach is invaluable for preparing for situations like Black Friday rushes, hot product releases, viral social media activity, or any other event that could send shockwaves of unanticipated traffic your way. As Liz Lambert, VP of Systems Reliability at Media.com put it: “We use stress tests like a battering ram to systematically identify failure points. That allows us to be ultra-confident in our application’s ability to stay up during our biggest traffic events of the year.”

Of course, stress tests shouldn’t just be done ad hoc before big events. They should be incorporated into your regular testing protocols and CI/CD workflows just like load tests. The more you stress test through every release and code iteration, the more resilient and battle-hardened your systems will become. Bonus – it can also reveal ways to run more efficiently and cut infrastructure costs by eliminating bloat.

In the next section, we’ll cover a few other common performance testing types you’ll want in your toolkit. Gotta make sure you’re prepared to take on whatever the developers or users throw your way! The path to performance mastery continues…

Other Key Testing Types

Performance testing is like a superhero team, with each member bringing their own special powers to the fight. We’ve already covered two of the heavy hitters – load testing and stress testing. But they’ve got some other powerful allies that are crucial for ensuring your apps have the strength and endurance to handle whatever gets thrown their way.

Let me introduce you to three other key performance testing avengers that deserve to be in your arsenal:

Endurance/Soak Testing

This one is all about assessing how software holds up over an extended period of heavy usage – usually anywhere from a few hours to several days. The purpose? To expose any insidious issues or degradations that only rear their ugly heads after prolonged heavy activity. Things like memory leaks, data integrity problems, or other stubborn bugs.

The process is pretty straightforward. You hammer your system with a sustained, high load for an extended duration while carefully monitoring performance metrics. If you see things going wonky over time, like slowing response times, growing queues, or steadily climbing resource usage, you know you’ve got memory leaks or other nasty issues to track down and fix.

Soak testing is an indispensable technique for any application that needs to run with ultra-high reliability and uptime – think consumer apps, streaming services, financial platforms, healthcare systems, etc. You don’t want a small issue slowly spreading like a virus until it overwhelms and crashes the whole system during a period of intense usage.

Spike Testing

While soak tests verify long-term stamina, spike tests evaluate your application’s ability to handle sudden, dramatic traffic spikes or bursts of extremely intense activity. It’s all about ensuring resilience for those moments when Ariana Grande’s new album drops or The Dress goes viral, sending a tidal wave of viewers flooding your way.

Spike tests rapidly ramp up to extremely high user loads, hold there for a period, then ramp back down…only to spike back up again minutes later. This relentless spiking replicates real-world scenarios of wildly fluctuating demand, so you can validate performance, measure how quickly the system responds and recovers, check that autoscaling kicks in, and more.
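The ramp-up, hold, ramp-down, repeat pattern boils down to a schedule of target user counts. Here's a minimal sketch that generates one, with one entry per time step (the step length, such as 30 seconds, is up to you). The parameter names are illustrative. A load generator would read this list and adjust the number of active virtual users at each step.

```python
def spike_profile(
    baseline_users: int,
    peak_users: int,
    ramp_steps: int,
    hold_steps: int,
    rest_steps: int,
    spikes: int,
) -> list[int]:
    """Target user count per time step: rest at baseline, ramp to peak,
    hold, ramp back down, and repeat for the requested number of spikes."""
    profile: list[int] = []
    step_size = (peak_users - baseline_users) / ramp_steps
    for _ in range(spikes):
        profile += [baseline_users] * rest_steps
        profile += [round(baseline_users + step_size * i) for i in range(1, ramp_steps + 1)]
        profile += [peak_users] * hold_steps
        profile += [round(peak_users - step_size * i) for i in range(1, ramp_steps + 1)]
    return profile


if __name__ == "__main__":
    print(spike_profile(baseline_users=100, peak_users=1000,
                        ramp_steps=3, hold_steps=2, rest_steps=2, spikes=2))
```

Many tools let you express a shape like this directly, for example k6's `stages` option or Locust's `LoadTestShape` class, so in practice you'd usually configure it there rather than hand-roll it.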

For businesses and apps where spiky usage patterns are the norm, comprehensive spike testing is mission critical for avoiding embarrassing failures during your biggest moments.

Scalability Testing

Last but not least, scalability testing examines…you guessed it, scalability! The goal here is to understand how your system’s performance scales (or doesn’t scale) in response to adding resources like additional servers, containers, database replicas, etc.

Let’s say you start a scalability test with your baseline environment. You generate load, measure performance, then add more resources and re-test, raising the load to match. Ideally, the throughput you can sustain at acceptable response times grows in line with the resources you added, so doubling your servers roughly doubles your capacity. If not, that signals scalability bottlenecks you’ll need to investigate and resolve.
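A common way to quantify "scaling in line with resources" is scaling efficiency: the throughput gain divided by the resource gain, where 1.0 means perfectly linear scaling. The sketch below uses made-up measurements and an assumed 0.8 cutoff to show the arithmetic. It is not a benchmark of any real system.

```python
def scaling_efficiency(base_nodes: int, base_rps: float, nodes: int, rps: float) -> float:
    """Throughput gain divided by resource gain; 1.0 means perfectly linear scaling."""
    return (rps / base_rps) / (nodes / base_nodes)


def first_bottleneck(measurements: dict[int, float], min_efficiency: float = 0.8):
    """Smallest node count whose efficiency (relative to the smallest
    configuration tested) drops below min_efficiency, or None."""
    base_nodes = min(measurements)
    base_rps = measurements[base_nodes]
    for nodes in sorted(measurements):
        if scaling_efficiency(base_nodes, base_rps, nodes, measurements[nodes]) < min_efficiency:
            return nodes
    return None


# Hypothetical results: node count -> max throughput sustained within the latency target.
results = {2: 1_000.0, 4: 1_900.0, 8: 3_000.0}
for nodes in sorted(results):
    print(nodes, round(scaling_efficiency(2, results[2], nodes, results[nodes]), 2))
print("bottleneck appears at", first_bottleneck(results), "nodes")
```

With these numbers, efficiency slips from 1.0 to 0.95 at four nodes and then falls to 0.75 at eight. That drop is the point where you'd start looking for a shared bottleneck, such as the database, that isn't scaling with the rest of the fleet.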

With modern cloud environments and auto-scaling capabilities, ensuring seamless scalability is more critical than ever. Scalability tests allow you to find any impediments and properly calibrate scaling policies/rules. Nobody wants to be throwing more and more money at extra cloud resources without the performance benefits to match!

There you have it – three more heavyweight members of the performance testing crew. When combined with load testing and stress testing, you’ve got one formidable team for knocking out any scalability, resilience or reliability issues before they can bring down your apps and anger your users. Next up, we’ll explore some awesome tools and strategies for executing all these tests effectively. The fight for flawless performance continues!

Performance Testing Tools & Strategies

At this point, I’ve hopefully convinced you that performance testing isn’t just some optional extra – it’s an essential discipline for any team operating software and applications at any real scale. But just understanding the importance of load testing, stress testing, and all the other flavors isn’t enough. You need to put those testing practices into action.

So let’s dive into the tooling and strategies that will allow you to actually execute comprehensive performance tests and leverage the critical insights they provide. Having the right testing arsenal in your corner is half the battle.

Tools of the Trade

When it comes to performance testing tools, you’ve got a buffet of options to choose from. On the open source side, some of the most popular and powerful solutions include:

– JMeter: The Apache-backed load testing king, JMeter is a favorite for its simplicity and flexibility in running tests across various protocols.

– Gatling: This Scala-based tool is known for its awesome reports/analytics and its ability to simulate realistic load scenarios through code-defined user behaviors.

– k6: A relative newcomer that is quickly gaining fans thanks to its developer-friendly scripting in JavaScript (ES6) and an efficient Go-based engine that can generate substantial load from a single machine.

– Locust: A Python-based, event-driven option that makes it easy to define user behavior and ramp-up/down scenarios.

Those are just a few of the many open source utilities out there. If you want a more streamlined, commercially supported solution, there are also paid options like BlazeMeter, LoadRunner, WebLOAD, and others.

The “best” tool really depends on your team’s skillsets, protocols, specific testing needs, and budget. Many teams leverage multiple solutions – like JMeter or k6 for basic load tests, combined with a premium tool for distributed testing at massive scale.

Whichever route you go, make sure your testing tool(s) check the essential boxes: Support for multiple load injector locations, flexible scripting, great reporting/analytics, integration with your other DevOps tooling, and the ability to simulate real user scenarios with techniques like spinning up browser instances.

Incorporating Testing into Your Workflow

Even more important than your specific tool choice is developing a sound strategy for integrating performance testing as a regular, automated practice within your development lifecycle. Done right, you’ll be constantly validating performance through every stage of the CI/CD pipeline.

For teams practicing true continuous testing, load tests and other performance checks become hardened, reusable scripts that execute automatically on every pull request or commit through tools like Jenkins, CircleCI, etc. If any performance regressions are detected, that code doesn’t make it to the next stage until the issues are addressed.
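A regression gate like this can be as simple as a script that your CI job runs after the load test, failing the stage with a non-zero exit code. Here's a minimal sketch. It assumes both the stored baseline and the current run are JSON files mapping endpoint to p95 latency in milliseconds, which is a hypothetical format. The 10% tolerance is also an assumption you'd tune.

```python
import json
import sys


def check_regression(baseline: dict, current: dict, tolerance: float = 0.10) -> list[str]:
    """Compare p95 latency per endpoint; report any endpoint that is missing
    or whose p95 grew by more than `tolerance` over the baseline."""
    failures = []
    for endpoint, base_p95 in baseline.items():
        cur_p95 = current.get(endpoint)
        if cur_p95 is None:
            failures.append(f"{endpoint}: missing from current run")
        elif cur_p95 > base_p95 * (1 + tolerance):
            failures.append(f"{endpoint}: p95 {cur_p95:.0f} ms vs baseline {base_p95:.0f} ms")
    return failures


def main(baseline_path: str, current_path: str) -> int:
    with open(baseline_path) as f:
        baseline = json.load(f)
    with open(current_path) as f:
        current = json.load(f)
    failures = check_regression(baseline, current)
    for msg in failures:
        print("REGRESSION:", msg)
    return 1 if failures else 0  # a non-zero exit code fails the CI stage


if __name__ == "__main__" and len(sys.argv) == 3:
    sys.exit(main(sys.argv[1], sys.argv[2]))
```

Comparing against a stored baseline with some tolerance, rather than a fixed absolute number, keeps normal run-to-run noise from blocking every merge while still catching real slowdowns.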

This tight feedback loop of constant performance validation helps catch problems earlier in the SDLC, when they’re easier and cheaper to fix. It also promotes a culture in which performance debt is treated with the same vigilance as code quality and test coverage.

Additionally, you’ll want to incorporate more rigorous soak tests, stress tests, and other advanced performance drills on a regular cadence – monthly, quarterly, etc. These can be executed against staging environments to ensure your apps are truly battle-tested and ready for real-world mayhem before promoting to production.

The Age of Performance Engineering

Cutting-edge shops are going even further by adopting a holistic “performance engineering” mindset. This means proactively modeling and testing performance requirements at the design phase – not just validating after code is written. Performance becomes a first-class consideration and constraint woven into the entire SDLC.
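As a small taste of design-phase modeling, here's a sketch based on Little's Law (L = λW). This is a general queueing result, not something specific to any tool mentioned here: the average number of requests in flight equals the arrival rate times the average time each request spends in the system. The requirement figures and the per-worker concurrency are hypothetical, and the model assumes steady-state traffic, so it says nothing about headroom for spikes.

```python
import math


def required_concurrency(target_rps: float, avg_response_s: float) -> float:
    """Little's Law, L = lambda * W: average number of requests in flight."""
    return target_rps * avg_response_s


def workers_needed(target_rps: float, avg_response_s: float, per_worker_concurrency: int) -> int:
    """Round up to whole workers, each able to serve per_worker_concurrency requests at once."""
    return math.ceil(required_concurrency(target_rps, avg_response_s) / per_worker_concurrency)


# Hypothetical requirement: 500 req/s at a 200 ms average response time,
# with each worker handling 16 concurrent requests.
print(required_concurrency(500, 0.2), workers_needed(500, 0.2, 16))
```

A back-of-the-envelope number like this gives your later load tests a concrete target to confirm or refute, which is exactly the shift from "validate after the fact" to designing for performance up front.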

Whether you’re exploring performance engineering, continuous performance testing, or just getting started, the key is shifting away from outdated practices of bolting on performance tests after the fact. Testing performance is every bit as critical and mandatory as functional tests, security checks, and other essential validation steps.

In the words of performance guru Michael Cusatis: “You wouldn’t hand uncompiled code to users in production. Likewise, you can’t in good faith deploy untested code to users and just hope for the best when it comes to performance.” Well said! Are you ready to take a more proactive, holistic approach and make performance a priority?